John Hopfield, former MBL Faculty, Wins Nobel Prize in Physics for Artificial Neural Network Development

John Hopfield of Princeton University, former faculty in the MBL's Methods in Computational Neuroscience course, and Geoffrey Hinton of the University of Toronto have been awarded the Nobel Prize in Physics "for foundational discoveries and inventions that enable machine learning with artificial neural networks," the Royal Swedish Academy of Sciences announced today.

"When we talk about artificial intelligence (AI), we often mean machine learning using artificial neural networks (ANNs). This year’s laureates have conducted important work with ANNs from the 1980s onward," the Academy stated.

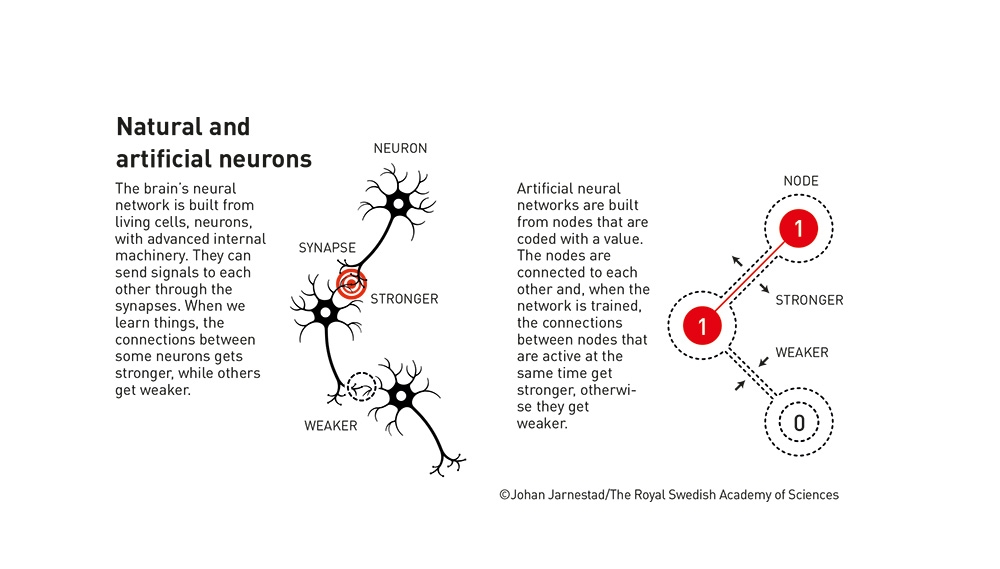

Inspired by the structure of the brain, Hopfield and Hinton used the tools of physics to develop this technology.

"John Hopfield created a [network] structure that can store and reconstruct information. Geoffrey Hinton invented a method that can independently discover properties in data, which has become important for the large artificial neural networks now in use," the Academy stated.

"Hinton and Hopfield received the Nobel Prize in Physics for their beautiful and elegant work, but this Nobel is also a recognition of the entire community that is working on computational and theoretical neuroscience," said Stefano Fusi of Columbia University, co-director of the MBL Methods in Computational Neuroscience (MCN) course.

"Hinton is widely considered the father of modern AI, and he is one of the pioneers who greatly contributed from the very beginning to the development of complex artificial neural networks. Hopfield not only proposed one of the first and most important models of associative memory implemented with recurrent neural networks, but he also attracted numerous theoretical physicists to neuroscience, creating a community of theorists who laid the foundations of computational neuroscience," Fusi said.

Hopfield was on the MCN course faculty in 1994 and from 1996 to 2001.

The following is drawn from scientific background provided by the Academy:

Inspired by biological neurons in the brain, artificial neural networks are large collections of 'neurons,' or nodes, connected by 'synapses,' or weighted couplings, which are trained to perform certain tasks rather than asked to execute a predetermined set of instructions. Their basic structure has close similarities with spin models in statistical physics applied to magnetism or alloy theory.

In the early 1980s, John Hopfield invented a network that can store patterns and has a method for recreating them. When the network is given an incomplete or slightly distorted pattern, the method can find the stored pattern that is most similar ("associative memory").

We can imagine the nodes in the Hopfield network as pixels. The network draws on the physics that describes a material's characteristics due to its atomic spin, a property that makes each atom a tiny magnet. The network as a whole is described in a manner equivalent to the energy of a spin system in physics, and it is trained by finding values for the connections between the nodes so that the saved images have low energy.
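The store-and-recall idea described above can be illustrated with a minimal sketch. It is not taken from the Academy's materials: the Hebbian learning rule, the one-node-at-a-time sign update, the tiny 8-pixel patterns, and the function names `train_hebbian`, `energy`, and `recall` are all assumptions chosen for illustration.

```python
import numpy as np


def train_hebbian(patterns):
    """Build symmetric connection weights from +1/-1 patterns (Hebbian rule, assumed here)."""
    patterns = np.asarray(patterns, dtype=float)
    n_nodes = patterns.shape[1]
    weights = patterns.T @ patterns / n_nodes
    np.fill_diagonal(weights, 0.0)  # no node is connected to itself
    return weights


def energy(weights, state):
    """Spin-system style energy; stored patterns should sit at low values."""
    state = np.asarray(state, dtype=float)
    return -0.5 * state @ weights @ state


def recall(weights, state, max_sweeps=10):
    """Update one node at a time until no node changes (or max_sweeps is reached)."""
    state = np.array(state, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            new_value = 1.0 if weights[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two illustrative 8-"pixel" images, encoded as +1 / -1 (hypothetical data).
stored = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
], dtype=float)
weights = train_hebbian(stored)

# Distort the first image by flipping one pixel, then let the network repair it.
noisy = stored[0].copy()
noisy[0] = -noisy[0]
recalled = recall(weights, noisy)

print("recovered first image:", np.array_equal(recalled, stored[0]))
print("energy before and after:", energy(weights, noisy), energy(weights, recalled))
```

In this toy setup the distorted image starts at a higher energy than the stored one, and each node update moves the state downhill until it settles on the saved pattern.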

In subsequent years, Hinton created and developed a related technology, the Boltzmann machine, and a technique called backpropagation, an algorithm that lets neural networks learn.

To date, 63 scientists affiliated with the MBL have been awarded a Nobel Prize, almost all of them in Physiology or Medicine or in Chemistry. Other than Hopfield, the only MBL affiliate to receive a Nobel Prize in Physics was Donald Glaser, an alumnus of and lecturer in the MBL Physiology course, who won in 1960 for his development of the bubble chamber.